This Easter bank holiday, I spent some time working on a little problem I have at work with a simulation I’m writing in Python.

It’s basically a Monte Carlo simulation that performs billions of calculations. To be more exact, the simulation is a script that performs about half a million calculations and has to run a few hundred thousand times. In other words, a lot of number crunching… And as my bosses are a bit impatient, it has to be done quickly, i.e. in hours as opposed to days.

I was running benchmarks at work on various machines (an old G5, a new Intel Duo laptop and an old P3 desktop). Based on my benchmarks, the old P3 desktop would need approximately 10 days to complete the required calculations, and it became clear that if I wanted to finish them within a reasonable amount of time, I would need a lot more processing power.

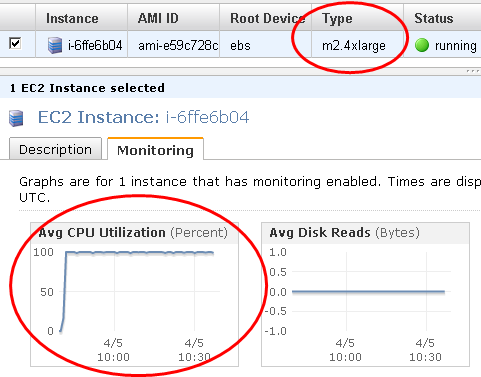

Over the weekend, I decided to try to quantify (i.e. benchmark) the performance of Amazon’s EC2 service. I used the m2.4xlarge instance type, which gives the highest computing power; per Amazon’s description, it is a “High-Memory Quadruple Extra Large Instance: 68.4 GB of memory, 26 EC2 Compute Units (8 virtual cores with 3.25 EC2 Compute Units each), 1690 GB of local instance storage, 64-bit platform”. To my surprise, the Python script ran on this virtual machine only slightly faster than on my laptop. After looking at the monitoring service Amazon offers, I realised that less than 20% of the VM’s computing power was being used while the model was running…

After a bit of searching/googling/reading, here is what I found:

– My Python script was essentially using only one of the VM’s cores, in other words 1/8th of its capacity.

– To use many processors efficiently, one could use the multiprocessing package, which is part of the standard library from Python 2.6 onwards, but this would add a new level of complexity to my project.

– I also tried Parallel Python, but I didn’t see any significant change in CPU usage.

– David Beazley has a very interesting video on this issue (Python’s Global Interpreter Lock, or GIL).
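For comparison, here is a minimal sketch of the multiprocessing route for independent repetitions. `one_repetition` is a hypothetical placeholder (a toy Monte Carlo estimate of π) standing in for the real model, and `run_all` is likewise an illustrative name. The point it shows is that each pool worker is a separate process with its own interpreter and its own GIL, so CPU-bound repetitions can spread across all the cores.

```python
import multiprocessing
import random

def one_repetition(seed):
    """Hypothetical stand-in for one repetition of the model.

    Each call builds its own generator from `seed`, so repetitions share no
    state and can run in any order, on any core.
    """
    rng = random.Random(seed)
    # Toy Monte Carlo estimate of pi from 10,000 random points in the unit square.
    n = 10_000
    hits = sum(1 for _ in range(n) if rng.random() ** 2 + rng.random() ** 2 <= 1.0)
    return 4.0 * hits / n

def run_all(repetitions, processes=None):
    """Run `repetitions` independent repetitions on a pool of worker processes.

    Each worker is a separate Python process with its own interpreter and GIL,
    so CPU-bound work spreads across cores. processes=None means one per core.
    """
    with multiprocessing.Pool(processes=processes) as pool:
        return pool.map(one_repetition, range(repetitions))
```

Called from a script as `run_all(100_000)` under an `if __name__ == "__main__":` guard (which multiprocessing needs on platforms that start workers in fresh interpreters), this would use one worker per core by default.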

After reading all this and spending a lot of time experimenting, I took a step back and tried to think this through. Because of the way a Monte Carlo simulation works, my 50 billion calculations were actually 100,000 repetitions of half a million calculations, and these repetitions were independent of each other. In other words, they could run concurrently.
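To make the independence argument concrete, here is a small sketch, assuming each repetition is seeded only by its own index. `run_repetition` and `run_in_chunks` are hypothetical names with a placeholder calculation. Because no repetition reads anything produced by another, splitting the work into chunks (as if each chunk ran in a separate process) gives exactly the same results as running everything one after another.

```python
import random

def run_repetition(index):
    """Hypothetical stand-in for one repetition of the model.

    Its random numbers come only from its own index, so it depends on
    nothing produced by any other repetition.
    """
    rng = random.Random(index)
    return sum(rng.random() for _ in range(50))  # placeholder calculation

def run_in_chunks(total, chunk_size):
    """Split `total` repetitions into chunks (as if each chunk ran in its own
    process) and concatenate the chunk results in index order."""
    results = []
    for start in range(0, total, chunk_size):
        chunk = range(start, min(start + chunk_size, total))
        results.extend(run_repetition(i) for i in chunk)
    return results
```

Seeding by index is just the simplest choice for a sketch; a real simulation may call for a more careful seeding scheme.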

In the end, a simple bash script solved this problem:

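```bash
#!/bin/bash

# Launch 1,000 independent copies of the simulation script.
for i in {1..1000}
do
    python 50M.pyx &   # "&" runs each copy in the background
done

# Block until every background copy has finished.
wait
```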

In the above script, I run a simple script (50M.pyx) 1,000 times; it contains a loop of 100 iterations of my main set of calculations (half a million calculations each). That makes 1,000 × 100 × 500,000 = 50 billion calculations in total.
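The post doesn’t show the contents of 50M.pyx, so the following is only a hypothetical sketch of its shape: `main_calculations` is a placeholder for the real model (shrunk here to 1,000 steps), and the optional seed argument is an assumption, not something the bash script above passes.

```python
import random

ITERATIONS = 100  # repetitions per process, as described for 50M.pyx

def main_calculations(rng):
    """Hypothetical placeholder for the ~500,000-step model (shrunk to 1,000 steps here)."""
    return sum(rng.random() for _ in range(1_000))

def main(argv):
    """Run ITERATIONS repetitions and return their results.

    An optional seed may be given as the first argument (e.g. `python 50M.pyx 42`);
    without one, random.Random() seeds itself from OS randomness (or the clock).
    """
    seed = int(argv[1]) if len(argv) > 1 else None
    rng = random.Random(seed)
    return [main_calculations(rng) for _ in range(ITERATIONS)]
```

A real script would end with `main(sys.argv)` under an `if __name__ == "__main__":` guard. Without a seed argument, each process seeds itself independently, so the 1,000 copies should not repeat each other’s random numbers.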

The ampersand at the end of the python line tells the shell not to wait for the command to finish before moving on, so the loop effectively launches 1,000 simultaneous instances of the 50M.pyx script. The VM has only 8 virtual cores, so the operating system time-slices these processes across them, but every core stays busy. With this simple method, the powerful VM is working flat out.
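Launching all 1,000 copies at once works, but with only 8 cores most of those processes are simply waiting their turn. As an alternative sketch (not the approach used above), the same batch can be driven from Python with `subprocess.Popen`, keeping at most one process per core alive at a time; `run_batch` and `python_cmd` are hypothetical helper names.

```python
import os
import subprocess
import sys

def python_cmd(*args):
    """Build a command that runs the current Python interpreter with `args`."""
    return [sys.executable, *args]

def run_batch(cmd, copies, max_parallel=None):
    """Run `copies` instances of `cmd`, keeping at most `max_parallel` alive at once.

    Returns the exit codes, in launch order.
    """
    if max_parallel is None:
        max_parallel = os.cpu_count() or 1  # default: one process per core
    running = []             # (launch index, Popen) for processes not yet waited on
    codes = [None] * copies
    for i in range(copies):
        if len(running) >= max_parallel:
            # All slots busy: wait for the oldest process before starting another.
            idx, proc = running.pop(0)
            codes[idx] = proc.wait()
        running.append((i, subprocess.Popen(cmd)))
    # Like bash's `wait`: collect every process that is still outstanding.
    for idx, proc in running:
        codes[idx] = proc.wait()
    return codes
```

Usage would be `run_batch(python_cmd("50M.pyx"), copies=1000)`. Waiting on the oldest process keeps the code short; the trade-off is that a slot can sit idle briefly if a later process finishes first.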

It ended up taking 9 hours 13 minutes to run 50 billion calculations on this machine. On an old P3 Dell desktop this would take approximately 10 days, so the lesson is that if we use many Python instances running concurrently on a (much, much) better machine, there is a significant improvement in performance. Am I stating the obvious? 🙂

When my simulation is ready, I will probably use 10 EC2 instances to get the calculations finished in less than an hour.
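A quick back-of-the-envelope check of these numbers, assuming the 10 days quoted for the P3 is exact and that the work would split perfectly evenly across instances with no start-up or coordination overhead (an optimistic idealisation):

```python
from datetime import timedelta

def estimated_wall_time(single_run, instances):
    """Ideal wall-clock time when the work divides evenly over `instances` machines
    with no overhead (an optimistic assumption)."""
    return single_run / instances

one_vm = timedelta(hours=9, minutes=13)   # measured on one m2.4xlarge
old_p3 = timedelta(days=10)               # approximate estimate for the P3 desktop

print(round(old_p3 / one_vm, 1))          # speed-up over the P3: 26.0
print(estimated_wall_time(one_vm, 10))    # 0:55:18
```

So 10 instances would land just under the one-hour target, with only a few minutes of slack for real-world overhead.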